The original three landscapes were never conceived with a narrative backbone. Instead, I relied on the concept of each landscape to dictate its aesthetic: working from a hierarchical set of terms derived from cliff, forest or swamp, I found images from around the world. After slicing, distorting, scaling and pasting, three imaginary landscapes were born. To analyse each further as a prospective site, I referenced the composing images back to their geographic origins. Instead of being one stationary location, site is now redefined as a collective, fixed through the lens of a camera and uploaded into cyberspace.

23.2.09

Applying AR and HT to space

Working with Augmented Reality (AR) and Head Tracking (HT) has rooted the project in its dependency upon the image. Rather than accepting the image as a single superficial representation, AR and HT allow a composite reading of space via multiple images. HT makes readings of inaccessible spaces possible: you control the depth of perceived space and the cone of vision, to peer around, up and above. Augmented Reality presents a different system by referencing the virtual back into the actual world. The same spatial applications apply, since inaccessible spaces can be seen simply through an overlay.

Inside floors and walls are spaces that do not exist in everyday consciousness. These inaccessible depths hold the building's organs and seem mysterious only when it comes time to repair damage. By measuring light with composite images, a perception of depth and obstruction is formed. HT places the collective in an environment where we, the viewers, can look inside walls and under floors.

Under the Floorboards

15.2.09

The what why how

This Master’s project centers on the agent of change within a given environment. Presently, the project has evolved into an investigation that uses Augmented Reality [AR] as a transmitter between systems in a given setting. In adopting Augmented Reality as a tool, the project must confront the issues of AR’s spatial application and the ethics behind the projected image. This discussion of ethics is necessary to the goals of the project. Because a projection is only applied onto a space, the environment is not affected physically at all. Within an installation involving projections, the factors are the people, sound or movement within a space, and none of them leaves an impact upon the environment. Rather than settle for a superficial result, this project seeks a more invasive outcome from Augmented Reality. Two avenues of possible architectural application are currently under study: spatial camouflage, and either inducing or alleviating a synesthetic condition.

Camouflage is promising because of its tactical approach to space; the desire of individuals to connect with their surroundings could lead to a blending of the physical and virtual. The intention here is to venture beyond an end result that is simply an installation piece relying on projections. To do so, and to explore Augmented Reality as a tool, an understanding of its limitations is essential. To properly understand Augmented Reality, a comparison with Virtual Reality [VR] is necessary. The user in Virtual Reality undergoes a complete immersion into a virtual environment, whereas Augmented Reality is the superimposition of information or virtual objects over the real world. AR operates by recognizing prescribed symbols in a real-time video feed. Over these symbols a virtual object is then projected. Now imagine an object in a room with its own symbol: the AR system reads the space behind the object and superimposes it over the object. No longer visible within the virtual world, the object is now camouflaged or transformed. It would seem plausible that if what was on the screen was fed back via a projector into the real room, the object would be camouflaged in reality. Since AR works with a live video feed, an object in motion would be hidden as long as the camera could capture the space behind it.

Parallel to the Augmented Reality concept is the Wii Remote Head Tracking hack.¹ In this hack, developed by Johnny Lee while at Carnegie Mellon University, the Wii Remote is reversed in orientation and instead searches for the user’s position in space. This extroverted system then allows the display to become three-dimensional within its frame. Further investigation will occur in order to combine this tool with the intention of spatial camouflage using Augmented Reality.
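The camouflage idea above (read the space behind the object, then paint it over the object) can be sketched in a few lines. This is only a rough illustration and not how any particular AR toolkit does it: it assumes a marker detector has already returned a bounding box for the object, and that a `background` image of the empty room was captured earlier. The names `marker_mask`, `camouflage` and the box format are my own and purely hypothetical.

```python
def marker_mask(width, height, box):
    """Build a True/False mask from a bounding box (x0, y0, x1, y1).

    The box is assumed to come from a marker detector (hypothetical here);
    x1 and y1 are exclusive.
    """
    x0, y0, x1, y1 = box
    return [
        [x0 <= x < x1 and y0 <= y < y1 for x in range(width)]
        for y in range(height)
    ]


def camouflage(frame, background, mask):
    """Replace every masked pixel of the live frame with the stored background.

    frame, background and mask are row-major lists of equal size.
    Pixels outside the mask are passed through untouched.
    """
    return [
        [bg if hidden else px for px, bg, hidden in zip(f_row, b_row, m_row)]
        for f_row, b_row, m_row in zip(frame, background, mask)
    ]


# Tiny grayscale example: a bright object (200) sits in a dim room (10).
background = [[10] * 4 for _ in range(3)]
frame = [row[:] for row in background]
frame[1][1] = frame[1][2] = 200

hidden = camouflage(frame, background, marker_mask(4, 3, (1, 1, 3, 2)))
```

In a real setup the `background` would have to be refreshed constantly from a second viewpoint, which is exactly the condition noted above: the object stays hidden only as long as a camera can capture the space behind it.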

The alternative application is to use the overlay between the virtual and real time to induce or alleviate the condition of synesthesia. Synesthesia is the neurological condition that occurs when one sensory pathway involuntarily crosses with another. In the case of induced synesthesia, the Augmented Reality tool can be used to create a sensory environment for the user that cross-pollinates sound with vision and possibly smell. Instead of the brain triggering automatic reactions in other senses, a computer is programmed to respond to sound as a series of colors or movements. Induced synesthesia can be applied on an individual basis to alleviate either mental or physical strain. By employing Augmented Reality, the user creates his or her own environment without affecting others.
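As a rough sketch of a computer "programmed to respond to sound as a series of colors", the snippet below maps pitch to hue and loudness to brightness. The mapping itself is an assumption of mine, not something taken from the synesthesia literature: low pitches become red, high pitches violet, on a logarithmic scale between 20 Hz and 20 kHz. The function name `sound_to_rgb` is hypothetical.

```python
import colorsys
import math

LOW_HZ = 20.0       # assumed lower edge of hearing
HIGH_HZ = 20000.0   # assumed upper edge of hearing


def sound_to_rgb(frequency_hz, amplitude):
    """Map a pitch and a loudness (0.0 to 1.0) to an (r, g, b) color, 0 to 255."""
    # Clamp the pitch into the audible range.
    f = min(max(frequency_hz, LOW_HZ), HIGH_HZ)
    # Position of the pitch on a logarithmic scale, from 0.0 to 1.0.
    position = math.log(f / LOW_HZ) / math.log(HIGH_HZ / LOW_HZ)
    # Use three quarters of the color wheel: red (0.0) through to violet (0.75).
    hue = position * 0.75
    # Loudness drives brightness; silence is black.
    value = min(max(amplitude, 0.0), 1.0)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)
    return tuple(round(c * 255) for c in (r, g, b))


# A loud, very low tone comes out as pure red.
color = sound_to_rgb(20.0, 1.0)
```

Feeding this with live microphone data and overlaying the color on the AR video feed would be the next step; both are outside what this sketch covers.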

Bibliography

Hisel, Dan. Camouflage: Or the Miscommunication of Space, http://temptationbyspace.blogspot.com/2009/01/camouflage-or-miscommunication-of.html, 2002.

Lee, Johnny Chung. Johnny Chung Lee: Projects, Carnegie Mellon University, http://www.cs.cmu.edu/~johnny/projects/wii/, Redmond Washington, 2008.

The Synesthetic Experience, MIT, http://web.mit.edu/synesthesia/www/, Oakbog Studios, 1997.

1 “As of June 2008, Nintendo has sold nearly 30 million Wii game consoles. This significantly exceeds the number of Tablet PCs in use today according to even the most generous estimates of Tablet PC sales. This makes the Wii Remote one of the most common computer input devices in the world. It also happens to be one of the most sophisticated. It contains a 1024x768 infrared camera with built-in hardware blob tracking of up to 4 points at 100Hz. This significantly out performs any PC "webcam" available today. It also contains a +/-3g 8-bit 3-axis accelerometer also operating at 100Hz and an expansion port for even more capability. These projects are an effort to explore and demonstrate applications that the millions of Wii Remotes in world readily support.” Johnny Lee

13.2.09

Possibility of...

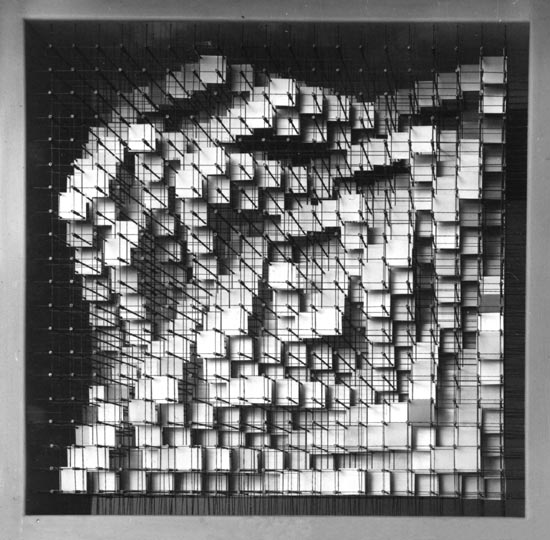

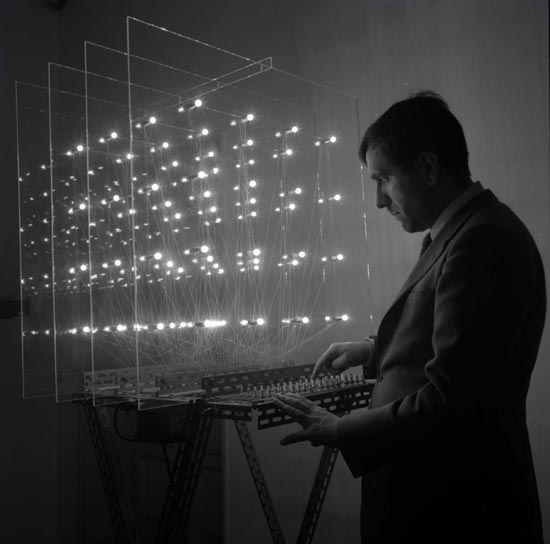

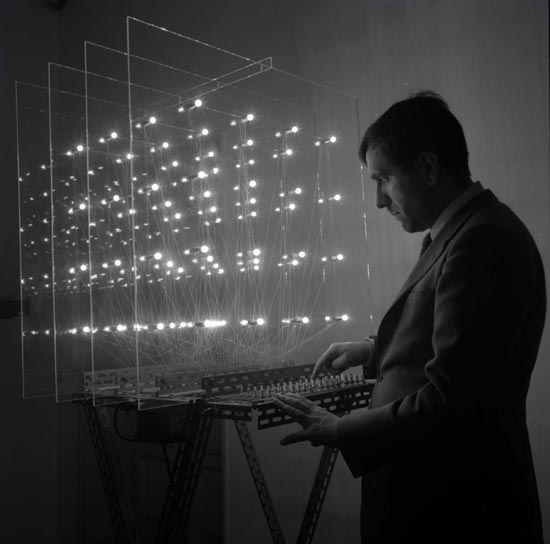

I came across the work of Enzo Mari, an Italian designer whose work spanned 50-some-odd years. I find the two pictures below fascinating and aesthetically way ahead of their time. The potential for playing with depth perception is fantastic and could really help structurally with my project. Have to work it in somehow.

http://www.designboom.com/weblog/cat/8/view/4127/enzo-mari-the-art-of-design-exhibition-at-the-gam-museum-turin.html

homage to fadat', lights, switches, plexiglass and steel, 1967

homage to fadat', lights, switches, plexiglass and steel, 1967

structure, anodized aluminium, brass and steel, 1964

1.2.09

For the Wiimote Augmented Reality to work, the viewer needs to wear infrared LEDs, which the Wiimote's infrared camera tracks. This allows only one person at a time to see the projected image. This part of the project has been interesting because I have to construct a circuit board for the LEDs to work. The Instructables website has been helpful for learning a lot about wiring a light system. The next step is to set up the Wiimote relay between the remote, infrared lights, and the display.
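To get a feel for what the relay has to compute, here is a rough sketch of how a head position could be estimated from two infrared dots seen by the Wiimote camera. The 1024x768 resolution comes from the Johnny Lee quote above; the 45 degree field of view and the 150 mm spacing between the two LEDs are assumptions of mine and would need to be measured for a real rig. The function name `head_position` is hypothetical.

```python
import math

CAMERA_WIDTH_PX = 1024                 # from the quoted camera resolution
CAMERA_HEIGHT_PX = 768
FIELD_OF_VIEW_RAD = math.radians(45)   # assumed horizontal field of view
RADIANS_PER_PIXEL = FIELD_OF_VIEW_RAD / CAMERA_WIDTH_PX
LED_SPACING_MM = 150.0                 # hypothetical distance between the two LEDs


def head_position(dot_a, dot_b):
    """Estimate (x, y, distance) in millimetres from two tracked IR dots.

    dot_a and dot_b are (x, y) pixel coordinates reported by the camera.
    x and y are offsets from the camera's central axis; their signs depend
    on how the remote is mounted.
    """
    (ax, ay), (bx, by) = dot_a, dot_b
    separation_px = math.hypot(bx - ax, by - ay)
    if separation_px == 0:
        raise ValueError("the two dots coincide, so distance is undefined")

    # The closer the head, the further apart the dots appear.
    half_angle = RADIANS_PER_PIXEL * separation_px / 2
    distance = (LED_SPACING_MM / 2) / math.tan(half_angle)

    # The midpoint of the dots gives the viewing angle off the central axis.
    mid_x = (ax + bx) / 2
    mid_y = (ay + by) / 2
    x = math.sin(RADIANS_PER_PIXEL * (mid_x - CAMERA_WIDTH_PX / 2)) * distance
    y = math.sin(RADIANS_PER_PIXEL * (mid_y - CAMERA_HEIGHT_PX / 2)) * distance
    return x, y, distance


# Two dots centred in the camera image: the head is straight ahead.
x, y, distance = head_position((462, 384), (562, 384))
```

The display would then redraw its scene from this position on every frame, which is also why only one viewer at a time gets a correct image.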
